Facial Recognition, NYC Surveillance, Discovery Reform, Digital Forensics Discovery (Part III) & More

Vol. 4, Issue 1

January 9, 2023

Welcome to Decrypting a Defense, the monthly newsletter of the Legal Aid Society’s Digital Forensics Unit. In this issue, Shane Ferro discusses the expanded use of facial recognition by private entities. Benjamin Burger examines surveillance technology and oversight in New York City. Joel Schmidt explains why discovery reform necessarily includes being able to access the discovery. Finally, Jerome Greco takes up Part III of our series on digital discovery.

The Digital Forensics Unit of the Legal Aid Society was created in 2013 in recognition of the growing use of digital evidence in the criminal legal system. Consisting of attorneys and forensic analysts and examiners, the Unit provides support and analysis to the Criminal, Juvenile Rights, and Civil Practices of the Legal Aid Society.

In the News

In NYC, Everyone Knows Your Face

Shane Ferro, Digital Forensics Staff Attorney

Most of the discussion of facial recognition in the last few years has concentrated on the state's use of the technology, mostly police using it to identify suspects in criminal investigations (correctly or incorrectly, as the case may be). However, multiple stories this month have highlighted how often private parties are now using the technology to identify and retaliate against their perceived enemies.

The most viral of the stories is that of the New Jersey mom who was not allowed to use the ticket she'd purchased to see the “Christmas Spectacular” at Radio City Music Hall with her 9-year-old daughter’s Girl Scout troop. The venue is owned by Madison Square Garden Entertainment and James Dolan (yes, the guy who has run the Knicks into the ground for over 25 years, dubbed “a Scrooge-like tyrant” by the Daily News). MSG properties now use facial recognition to scan the face of everyone who enters, flag Dolan’s enemies, and ban those people from events they’ve bought tickets to. Enemies in this case include every lawyer who works for a firm involved in a lawsuit against MSG, whether or not they worked on the case.

Multiple lawyers from multiple firms have been banned from both MSG performance venues and Knicks and Rangers games. Being lawyers, some of them have initiated yet more litigation against MSG for denying entry to valid ticket holders.

Kashmir Hill and Corey Kilgannon at the New York Times reported that MSG’s technology appears to scrape the headshots off offending firms’ websites and compare them to the faces of people who enter MSG properties. As you can see from the photo above, taken at Madison Square Garden in early January, each metal detector now has a camera poised above it, presumably so people can be flagged coming into the building.

Meanwhile, Vice published a story this week about NYC landlords using facial recognition to identify their rent-regulated tenants, catch them violating their lease terms, and then evict them. The story highlights research by surveillance technology professor Erin McElroy of UT Austin, who has identified two surveillance tech companies that specifically market biometrics as a tool for evicting tenants: Teman and Reliant Safety. Both companies have a strong foothold in NYC and market digital surveillance as a way for landlords to detect illegal sublets and evict rent-stabilized tenants. In 2019, in a rare success, residents of Atlantic Plaza Towers in Brooklyn prevented facial recognition surveillance from being installed in their buildings.

There has been backlash to both the stories about MSG and the use of surveillance technology in housing. At a gut level, it feels creepy and wrong. But our elected officials have done very little to stop it. New York City, unlike Chicago, New Orleans (sort of), or San Francisco, has taken barely any steps to limit or ban the use of facial recognition technology here by either the government or private parties.

Watching Big Brother

Benjamin S. Burger, Digital Forensics Staff Attorney

Mayor Eric Adams is entering his second year in office and doubling down on the pro-surveillance rhetoric. In an interview with Politico, Adams stated that New York City has not “embraced” surveillance technology. He also added that elected officials have the wrong mindset when it comes to government surveillance and should instead think of it as “Big Brother is protecting you.”

Surveillance technology suffers from a number of issues. It doesn’t work. Or it produces racist results. Or it’s abused by law enforcement to spy on vulnerable populations. All of these issues have occurred in New York City and will continue to plague surveillance technology as long as politicians turn to “easy” answers on crime.

However, one overarching issue with all of the surveillance technology deployed in New York City is that no one oversees how it is deployed or how law enforcement uses it. For almost 15 years, the New York City Police Department and the Mayor’s Office had a secret agreement allowing the police to purchase surveillance technology outside the normal contracting process. The few laws we do have to hold the police accountable for the technology they use are extremely limited and practically ignored [PDF] by NYPD. Without any local entity, such as the City Council, overseeing NYPD’s technology, we end up with vendors and the police misleading the public about their own policies and regulations. Recently, the Legal Aid Society uncovered evidence that NYPD knew the locations of ShotSpotter’s always-recording gunshot detection sensors. This violated ShotSpotter’s internal policies and promises the company had made to other groups. No one in city government was holding either ShotSpotter or NYPD accountable to their own policies.

With Mayor Adams looking to increase surveillance of the public and allow technology to intrude into the most private areas of our lives, it’s worthwhile to remember the ending of 1984:

“He was in the public dock, confessing everything, implicating everybody. He was walking down the white-tiled corridor, with the feeling of walking in sunlight, and an armed guard at his back. The long-hoped-for bullet was entering his brain . . . But it was all right, everything was all right, the struggle was finished. He had won the victory over himself. He loved Big Brother.”

In the Courts

Discovery Law Requires The Ability To Download Video

Joel Schmidt, Digital Forensics Staff Attorney

Following an overhaul of New York’s criminal discovery statute in 2020, it is now well established that in a criminal prosecution the prosecutor is required to disclose to the defense all body camera footage associated with the case. But is disclosure itself sufficient, or must the defense also be given an opportunity to download the video?

The answer to this question matters for two reasons. First, it determines how much use the defense can make of the video. For example, the defense may be unable to enhance the video if it is not given an original copy. Such enhancements can reveal license plate numbers and other information that can cast doubt on the prosecution's case. Body camera footage can also reveal police misconduct, underscoring how important it is to scrutinize all of the video closely.

Second, as part of the discovery statute overhaul, the prosecution's discovery obligations are now intertwined with their speedy trial obligations. A prosecutor's failure to comply with their discovery obligations can now result in dismissal of the case. As relevant here, if a prosecutor is required to allow the defense to download a body camera video and nonetheless prevents the defense from doing so, the result can be a complete dismissal.

In People v. Amir, the prosecution disclosed the body-worn camera footage but did not give the defense the ability to download it, insisting they were not required to do so, and filed a Certificate of Compliance with the court certifying that they had complied with their discovery obligations under the law.

About five weeks later, the prosecution reversed course and finally allowed the defense to download the video. The defense moved to dismiss the case, arguing that the five-week delay must be charged against the prosecution, which in this case meant the prosecution had exceeded the time within which they were required to be ready for trial.

The court agreed. In a decision handed down this past September, the Honorable Wanda Licitra of the Bronx County Criminal Court held that by failing to allow the defense to download the video, the prosecution failed to meet their discovery obligations. Because the five-week period put them over the ninety days within which they were required to be ready for trial, the case had to be dismissed (the court also found other, independent grounds for dismissal).

The text of the new discovery statute supports the court’s ruling. Under the terms of the statute the prosecution is required to allow the defense “to discover, inspect, copy, photograph and test” the discovery. You can’t do any of those things with body camera footage if you’re unable to download the video.

Ask an Attorney

The following article is Part Three of a special three-part series on digital forensics/surveillance discovery in criminal cases. Part One addressed call detail records from wireless phone providers. Part Two covered portable reports and screenshots.

Jerome D. Greco, Digital Forensics Supervising Attorney

Part 3: ShotSpotter Records

ShotSpotter is an acoustic gunshot detection and location system. In other words, ShotSpotter sensors purportedly can distinguish the sound of gunshots from other loud noises in a designated coverage area and then provide an approximate location from which a gunshot was fired. There are multiple issues with the ShotSpotter system and the company itself, including its effectiveness, its invasion of privacy, and its contribution to police violence against communities of color. However, this article will focus on the basics of ShotSpotter-related discovery in New York City: the Investigative Lead Summary, audio recordings, and the Detailed Forensic Report.

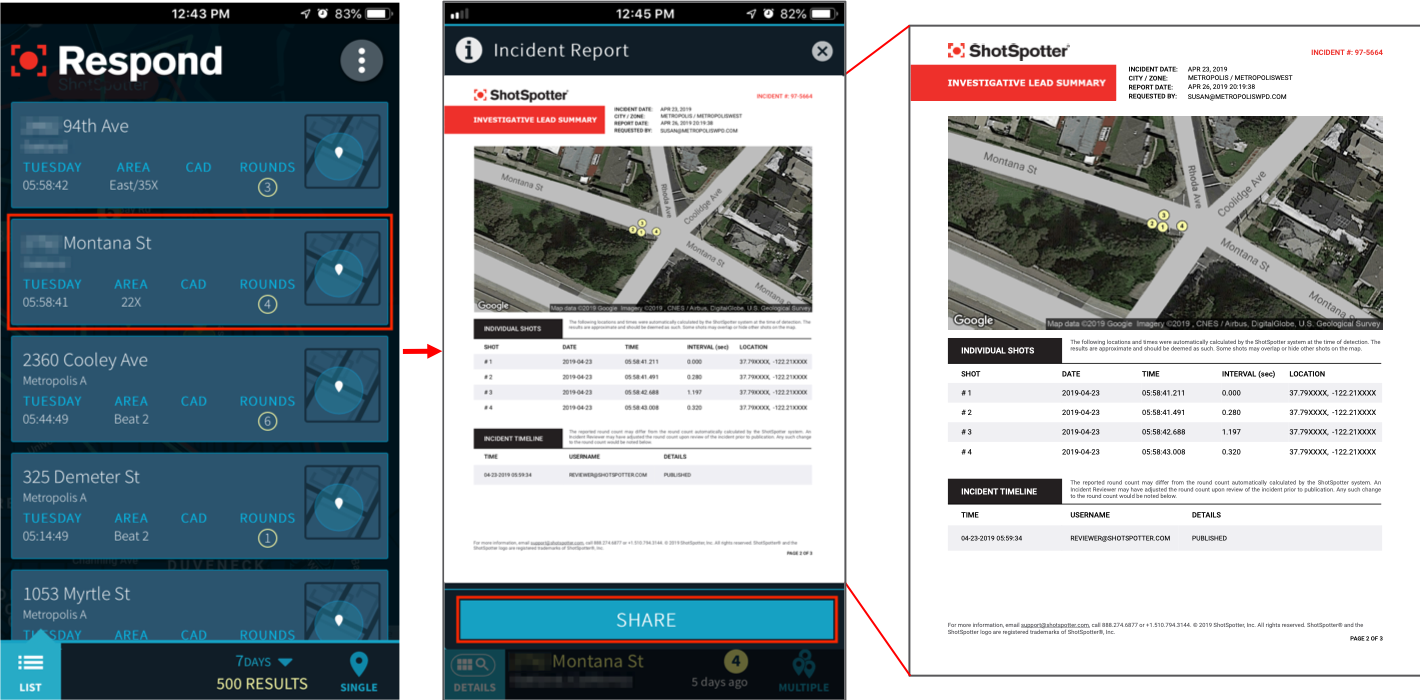

Investigative Lead Summary (ILS)

The Investigative Lead Summary, or ILS Report, is the first ShotSpotter document you should receive. It replaced the Enhanced Incident Report that was previously provided. The ILS Report is typically 3-4 pages long and includes a map indicating where the ShotSpotter system believes the gunshot(s) originated, a confidence circle for the shot location(s), and the number of rounds fired. It also contains audio recordings from each sensor that identified the shot(s), along with each sensor's distance from the incident. Many of these conclusions are questionable, and ShotSpotter itself may change them later in a Detailed Forensic Report.
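When a case turns on whether a particular address falls inside the ILS confidence circle, a great-circle distance calculation is enough to check it. The Python sketch below assumes you can read the circle's center as latitude/longitude and its radius in meters from the report or its map; the coordinates in the example are hypothetical. It uses the haversine formula, which treats the Earth as a sphere, an approximation that is more than precise enough at city-block scale.

```python
import math

# Mean Earth radius in meters (spherical approximation).
EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_confidence_circle(center: tuple[float, float], radius_m: float,
                             point: tuple[float, float]) -> bool:
    """True if `point` lies within `radius_m` meters of the circle's center."""
    return haversine_m(center[0], center[1], point[0], point[1]) <= radius_m


if __name__ == "__main__":
    # Hypothetical values, not taken from any real ILS Report.
    center = (40.8176, -73.9209)
    radius_m = 25.0
    address = (40.8180, -73.9209)  # 0.0004 degrees of latitude north of the center
    dist = haversine_m(*center, *address)
    inside = inside_confidence_circle(center, radius_m, address)
    print(f"{dist:.1f} m from center; inside circle: {inside}")
```

The circle is ShotSpotter's own estimate, so a result either way only tells you where that estimate places the shot relative to the address.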

Audio Recordings

You should receive the audio recordings of the alleged shot(s) for each sensor that ShotSpotter claims detected them. They may be provided as separate MP3 files or, more commonly, they will be embedded in the PDF copy of the ILS Report.

When the recordings are embedded in the ILS Report, you can click on the audio waveform graphs to listen to each one. Some PDF programs play the recording directly in the PDF; many others open it in your web browser or in an audio program installed on your device. In all of these cases, the recording is streamed from ShotSpotter’s server. It is a good idea to preserve your own copy of the MP3 recordings so that you do not need to keep connecting to ShotSpotter. If ShotSpotter were to remove access to a recording in the future, you would still have your own copy to play. Additionally, it is unknown whether ShotSpotter keeps any data on who accesses the recordings on its servers, or how often, which is information we do not want it to obtain.
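A minimal Python sketch of the preservation step: it saves the bytes of one recording and logs a SHA-256 hash, so you can later show that your copy has not changed. It assumes the link in the PDF points directly at an MP3 file, which may not hold for every report; the URL, folder, and file names below are hypothetical. Note that downloading the file still contacts ShotSpotter's server once.

```python
import hashlib
import json
import urllib.request
from datetime import datetime, timezone
from pathlib import Path


def fetch_recording(url: str) -> bytes:
    """Download one recording from the (hypothetical) URL the PDF link points to."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read()


def preserve_recording(data: bytes, dest_dir: Path, filename: str) -> str:
    """Save a local copy, append its SHA-256 to a log file, and return the hash."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    (dest_dir / filename).write_bytes(data)
    digest = hashlib.sha256(data).hexdigest()
    entry = {
        "file": filename,
        "sha256": digest,
        "bytes": len(data),
        "saved_utc": datetime.now(timezone.utc).isoformat(),
    }
    # One JSON object per line, so each new recording just appends a record.
    with open(dest_dir / "preservation_log.jsonl", "a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return digest


def verify_recording(path: Path, expected_sha256: str) -> bool:
    """Re-hash a saved file and compare it with the logged value."""
    return hashlib.sha256(path.read_bytes()).hexdigest() == expected_sha256


if __name__ == "__main__":
    # Hypothetical usage; replace with the link copied from the ILS Report PDF.
    # data = fetch_recording("https://example.invalid/recording.mp3")
    # preserve_recording(data, Path("case_files/shotspotter_audio"), "sensor_01.mp3")
    pass
```

Hashing at the moment of download lets you re-run `verify_recording` before a hearing and confirm the file you play is the one you saved.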

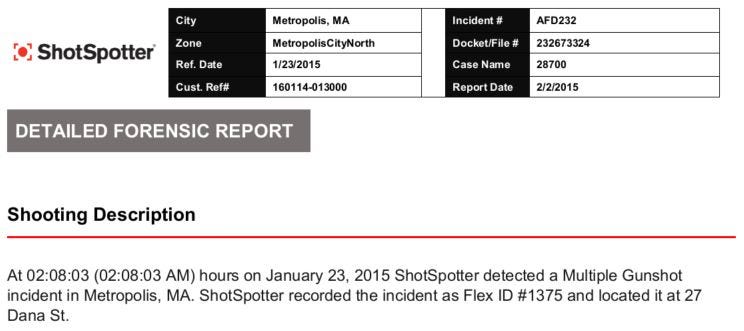

Detailed Forensic Report (DFR)

The Detailed Forensic Report is a document prepared by a ShotSpotter employee and is approximately 10-15 pages long. It is created only when the prosecution or the police department orders it. Because it must be ordered separately and costs law enforcement additional money, it is typically requested only when a case is nearing trial or a critical hearing. In New York City, the six prosecution offices (the five borough District Attorneys and the Special Narcotics Prosecutor) order the report through a designated NYPD officer. The prosecution will call the ShotSpotter employee who prepared the report as an “expert” to admit the report into evidence and to interpret the ShotSpotter results for the judge and/or jury. As its name suggests, the DFR provides more detail about the location of the shot(s), the number of shots, and the process used to determine that information. You may notice that the DFR’s information contradicts the ILS Report, because the ShotSpotter employee who prepares the DFR may adjust or correct the conclusions made in the ILS. For example, the DFR may report fewer gunshots than the ILS Report because the “expert” determined that one of the gunshots counted in the ILS was the echo of another gunshot, not a separately fired shot.
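The echo example turns on simple arithmetic: a reflection travels a longer path than the direct sound, so it reaches a sensor a fraction of a second after the original impulse. The Python sketch below is basic acoustics, not ShotSpotter's method. It estimates that delay from the extra path length using the common approximation for the speed of sound in dry air, c ≈ 331.3 + 0.606·T m/s (T in °C); the 50 m path difference and the 0.15 s gap are hypothetical.

```python
def speed_of_sound_mps(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temperature temp_c (degrees C)."""
    return 331.3 + 0.606 * temp_c


def echo_delay_s(extra_path_m: float, temp_c: float = 20.0) -> float:
    """Seconds between the direct sound and an echo whose path is extra_path_m longer."""
    return extra_path_m / speed_of_sound_mps(temp_c)


def gap_matches_echo(gap_s: float, extra_path_m: float, temp_c: float = 20.0,
                     tolerance_s: float = 0.01) -> bool:
    """True if the observed gap between two impulses is near the predicted echo delay."""
    return abs(gap_s - echo_delay_s(extra_path_m, temp_c)) <= tolerance_s


if __name__ == "__main__":
    # Hypothetical: a building face adds 50 m to the sound's path to one sensor.
    delay = echo_delay_s(50.0, temp_c=20.0)
    print(f"Predicted echo delay: {delay:.3f} s")
    print("0.15 s gap consistent with an echo:", gap_matches_echo(0.15, 50.0))
```

A gap close to the predicted delay is consistent with an echo, but it does not rule out two shots fired in quick succession, which is exactly the kind of judgment call the DFR's “expert” is making.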

Upcoming Events

January 27, 2023

Artificial Intelligence And The Law: Overview, Key Issues And Practice Trends (NYSBA) (Virtual)

February 21, 2023-March 2, 2023

Magnet Virtual Summit (Magnet Forensics) (Virtual)

March 11-18, 2023

Open Data Week 2023 (Various Locations in NYC)

April 17-19, 2023

Magnet User Summit (Magnet Forensics) (Nashville, TN)

April 22, 2023

BSidesNYC (New York, NY)

April 27-29, 2023

16th Annual Forensic Science & The Law Seminar (NACDL) (Las Vegas, NV)

June 5-8, 2023

Techno Security & Digital Forensics Conference East (Wilmington, NC)

August 10-13, 2023

DEF CON 31 (Las Vegas, NV)

September 11-13, 2023

Techno Security & Digital Forensics Conference West (Pasadena, CA)

Small Bytes

Cops Can Extract Data From 10,000 Different Car Models’ Infotainment Systems (Forbes)

Apple Kills Its Plan to Scan Your Photos for CSAM. Here’s What’s Next (Wired)

Amazon, Ashton Kutcher And America’s Surveillance Of The Sex Trade (Forbes)

“Out Of Control”: Dozens of Telehealth Startups Sent Sensitive Health Information to Big Tech Companies (The Markup)

LAPD doesn’t fully track its use of facial recognition, report finds (Los Angeles Times)

A Roomba recorded a woman on the toilet. How did screenshots end up on Facebook? (MIT Technology Review)

Amazon Ring Cameras Used in Nationwide ‘Swatting’ Spree, US Says (Bloomberg)

Elite NYPD squad uses surveillance video to track and catch criminals: ‘We take a lot of pride in our work’ (New York Daily News)

They Called 911 for Help. Police and Prosecutors Used a New Junk Science to Decide They Were Liars. (ProPublica)

Innocent Black Man Jailed After Facial Recognition Went Wrong: Lawyer (Gizmodo)